When it comes to manipulating sound, the Web Audio API is really nice and lets web developers handle almost any audio task.

The legacy ScriptProcessorNode API

The Web Audio API works with interconnected audio nodes: audio passes through these nodes, which manipulate, generate, or simply analyse the sound.

This produces an audio graph, like this one:

You can use anything that generates sound as a source:

- an existing audio/video tag

- your webcam/mic

- an existing audio file
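As a rough sketch, each of these sources maps to a factory method on the AudioContext. The helper name `createSourceNode` and its `kind` strings are made up for illustration; the three `create…` methods it calls are the standard ones.

```javascript
// Hypothetical helper: picks the AudioContext factory matching each source type.
function createSourceNode(audioContext, kind, payload) {
  switch (kind) {
    case 'element': // payload: an existing <audio> or <video> element
      return audioContext.createMediaElementSource(payload);
    case 'stream': // payload: a MediaStream, e.g. obtained from getUserMedia()
      return audioContext.createMediaStreamSource(payload);
    case 'buffer': { // payload: an AudioBuffer, e.g. obtained from decodeAudioData()
      const source = audioContext.createBufferSource();
      source.buffer = payload;
      return source;
    }
    default:
      throw new Error('Unknown source kind: ' + kind);
  }
}
```

In a browser, something like `createSourceNode(ctx, 'element', document.querySelector('audio')).connect(ctx.destination)` would then route the tag's audio into the graph.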

You can also generate your own buffer. To do that, the Web Audio working group created the ScriptProcessorNode, a special node that lets you manipulate the sound buffer with any JavaScript code:

    // create a new script processor node with a buffer length of 4096 samples
    let mixerNode = audioContext.createScriptProcessor(4096);

    mixerNode.onaudioprocess = function(audioEvent) {
        let outputBuffer = audioEvent.outputBuffer.getChannelData(0),
            inputBuffer = audioEvent.inputBuffer.getChannelData(0);

        // manipulate audio here
    };
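To give an idea of what the `// manipulate audio here` placeholder could hold, here is a minimal sketch of a gain stage. The names `applyGain` and `createGainProcessor` are hypothetical, and the mono in / mono out setup is an assumption made to keep the example short.

```javascript
// Hypothetical DSP helper: copies input to output, scaled by a gain factor.
function applyGain(input, output, gain) {
  for (let i = 0; i < input.length; i++) {
    output[i] = input[i] * gain;
  }
}

// Wires the helper into a ScriptProcessorNode (1 input channel, 1 output channel).
function createGainProcessor(audioContext, gain) {
  const node = audioContext.createScriptProcessor(4096, 1, 1);
  node.onaudioprocess = function (audioEvent) {
    applyGain(
      audioEvent.inputBuffer.getChannelData(0),
      audioEvent.outputBuffer.getChannelData(0),
      gain
    );
  };
  return node;
}
```

The returned node still has to be connected between a source and `audioContext.destination` before any audio flows through it.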

It’s easy and works quite well but comes with a few caveats:

- it was under-specified, so browsers ended up with their own implementations, which leads to inconsistent results when your code runs in different browsers

- it runs on the main (UI) thread, so when this thread is busy handling user interaction, audio can stutter or drop out; likewise, heavy buffer manipulation can make your app less responsive

- the API also uses double buffering, which increases latency

- since you have access to the DOM, you may also modify it hundreds of times a second, which can be really bad for performance

Because of this, a new API was designed to address these issues: AudioWorklet.
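To put the latency caveat in numbers, here is a quick back-of-the-envelope calculation. The 44100 Hz sample rate is an assumption (the real rate depends on the device), and double buffering adds further delay on top of the single-buffer figure shown here.

```javascript
// Duration of one buffer in milliseconds, for a given size and sample rate.
function bufferDurationMs(frames, sampleRate) {
  return (frames / sampleRate) * 1000;
}

const assumedSampleRate = 44100; // assumption, not taken from a real device

// the 4096-sample buffer used with ScriptProcessorNode above
console.log(bufferDurationMs(4096, assumedSampleRate).toFixed(1)); // "92.9"

// the 128-frame blocks that AudioWorklet processes
console.log(bufferDurationMs(128, assumedSampleRate).toFixed(1)); // "2.9"
```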

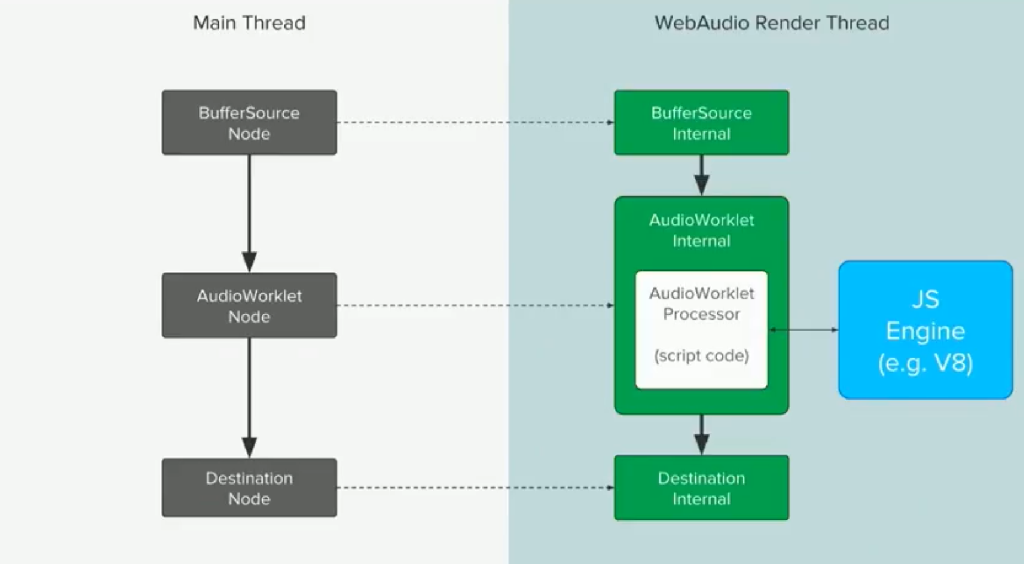

The new AudioWorklet API

AudioWorklet moves the script processing into an audio thread:

Here are the benefits of the AudioWorklet API:

- it runs on the audio rendering thread

- it is better specified

- it supports AudioParam natively

Since it runs in a special thread, some extra work is necessary to load your code and use it in a processor node.

First, you have to create a new file (you could also pass a blob) containing a class that extends AudioWorkletProcessor:

    class PTModuleProcessor extends AudioWorkletProcessor {
        constructor() {
            super();
        }

        process(inputs, outputs, params) {
            // this method gets called automatically with a buffer of 128 frames
            // audio processing goes here

            // if you don't return true, the browser may stop calling your process method
            return true;
        }
    }

    // you also need to register your class so that it can be instantiated from the main thread
    registerProcessor('mod-processor', PTModuleProcessor);
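Since AudioWorklet supports AudioParam natively, a processor can declare its own parameters through the static `parameterDescriptors` getter. The following is only a sketch: `GainProcessor`, `applyGainParam`, the `gain` parameter and the `'gain-processor'` name are all made up for the example and are not part of the module player.

```javascript
// Hypothetical helper: scales input by an AudioParam's values.
// A parameter array holds either a single value (constant for the whole block)
// or one value per frame (when the parameter is being automated).
function applyGainParam(input, output, gainValues) {
  for (let i = 0; i < output.length; i++) {
    const gain = gainValues.length > 1 ? gainValues[i] : gainValues[0];
    output[i] = input[i] * gain;
  }
}

// AudioWorkletProcessor only exists inside the worklet scope.
if (typeof AudioWorkletProcessor !== 'undefined') {
  class GainProcessor extends AudioWorkletProcessor {
    static get parameterDescriptors() {
      return [{ name: 'gain', defaultValue: 1, minValue: 0, maxValue: 1 }];
    }

    process(inputs, outputs, parameters) {
      const input = inputs[0];
      const output = outputs[0];
      // an unconnected input has no channels, so nothing is written in that case
      for (let ch = 0; ch < output.length && ch < input.length; ch++) {
        applyGainParam(input[ch], output[ch], parameters.gain);
      }
      return true;
    }
  }

  registerProcessor('gain-processor', GainProcessor);
}
```

On the main thread, the parameter is then reachable with `node.parameters.get('gain')` and can be automated like any other AudioParam.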

Once your processor is written and its module has been loaded with audioContext.audioWorklet.addModule(), using it is as simple as this:

    this.workletNode = new AudioWorkletNode(this.context, 'mod-processor', {
        // you have to specify the channel count for each output; there is one output by default, so a single entry is enough
        outputChannelCount: [2]
    });
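One step is easy to miss: the processor's module has to be loaded into the context with `audioWorklet.addModule()` before the node can be constructed. Here is a minimal sketch of the whole sequence; the `createModNode` name and the `'mod-processor.js'` file name are assumptions, and the `NodeClass` argument only exists so the function can be exercised outside a browser.

```javascript
// Loads the processor module, then creates the node that runs it.
async function createModNode(context, moduleUrl, NodeClass = globalThis.AudioWorkletNode) {
  // addModule() returns a promise that resolves once the module has been loaded
  await context.audioWorklet.addModule(moduleUrl);
  return new NodeClass(context, 'mod-processor', { outputChannelCount: [2] });
}
```

In a page, this could be used as `createModNode(context, 'mod-processor.js').then((node) => node.connect(context.destination))`.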

Only one problem remains now: how do you communicate between the main thread and your processing thread?

Well, just like you would with any worker, using messages: both the processor (running on the audio thread) and the AudioWorkletNode (running on the main thread) have a property called port, which can be used with the usual postMessage() and onmessage.

Note that since postMessage() does not share data between the two threads, if you pass a buffer, a copy of this buffer will be sent.
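The copy is made by the structured clone algorithm, which is also exposed as the global `structuredClone()` function in recent browsers and Node.js versions. The sketch below uses it to show the difference between copying a buffer and transferring it; the sample values are arbitrary.

```javascript
const samples = new Float32Array([0.1, 0.2, 0.3]);

// Default behaviour: the receiver gets an independent copy.
const copy = structuredClone(samples);
copy[0] = 0.9;
console.log(samples[0] === copy[0]); // false, the original is untouched

// Listing the underlying ArrayBuffer as transferable moves it instead of copying it:
const moved = structuredClone(samples.buffer, { transfer: [samples.buffer] });
console.log(moved.byteLength, samples.byteLength); // 12 0
```

postMessage() accepts the same kind of transfer list as its second argument, so a large module file could be handed over with `workletNode.port.postMessage(moduleData, [moduleData])` (where `moduleData` is a hypothetical ArrayBuffer), at the cost of the sender losing access to it.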

Browser Support

Even though the ScriptProcessorNode API has been deprecated for 4 years now, most browsers still support only that API. As I write this article, only Chrome supports the AudioWorklet API in its stable branch.

This doesn’t mean that you cannot use it now since several polyfills already exist, like audioworklet-polyfill by Google and audioworklet-polyfill by jariseon.
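If you would rather pick the API at runtime, a simple feature test is enough. This is a sketch of one way to do it; the `detectAudioBackend` helper and the strings it returns are made up for the example.

```javascript
// Hypothetical helper: reports which scripting API the given global scope offers.
function detectAudioBackend(scope = globalThis) {
  const Context = scope.AudioContext || scope.webkitAudioContext;
  if (!Context) return 'none';
  if (typeof scope.AudioWorkletNode === 'function') return 'audioworklet';
  if (typeof Context.prototype.createScriptProcessor === 'function') return 'scriptprocessor';
  return 'none';
}
```

Calling `detectAudioBackend()` in a page then tells you whether to load the worklet module or fall back to a ScriptProcessorNode (or to a polyfill).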

Real world example

To practise, I wrote a little Soundtracker module player that makes use of the new AudioWorklet API. A demo and the full source code can be found here:

Update: it seems the latest Chrome (69) has a nasty bug: it refuses to reload the processor’s module after you modify it (outside of Chrome) and reload the page, even when devtools are open and the Disable cache option is enabled.

While waiting for Google to fix it, I had to go back to Chrome 68.